The Future of Renewable Energy Transmission and Interconnection: Grid Expansion

Addressing the Challenges of Grid Expansion

Grid Infrastructure Deployment

Building a grid infrastructure is not only a technology exercise; it lays the foundation for future growth. The initial phase requires careful financial planning, because a substantial share of the capital goes into procuring hardware and software. Many organizations overlook that server and storage selection should anticipate three to five years of growth rather than only current needs. The middleware layer deserves equal attention: it is the software glue that lets distributed resources communicate, and its efficiency limits how well the rest of the system performs. When selecting a job scheduler, evaluate not only current workflows but also use cases that may emerge as research directions evolve.
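Sizing hardware for three to five years of growth is compound-growth arithmetic. The sketch below is a minimal illustration under stated assumptions: `projected_capacity` is a hypothetical helper, the annual growth rate and the 20% safety headroom are placeholder values, and the unit (TB, cores, racks) is whatever you feed in.

```python
def projected_capacity(current: float, annual_growth: float, years: int,
                       headroom: float = 0.2) -> float:
    """Capacity to provision now so that demand after `years` of compound
    growth at `annual_growth` (e.g. 0.3 = 30% per year) still fits, plus a
    safety `headroom` fraction on top. All inputs are planning assumptions."""
    if annual_growth < 0 or years < 0 or headroom < 0:
        raise ValueError("growth, years and headroom must be non-negative")
    # Compound growth: demand_n = demand_0 * (1 + g)^n
    future_demand = current * (1 + annual_growth) ** years
    return future_demand * (1 + headroom)


# Compare the three- and five-year horizons for 100 TB of storage
# growing at an assumed 30% per year.
for horizon in (3, 5):
    print(horizon, "years:", round(projected_capacity(100, 0.3, horizon), 1), "TB")
```

Running the comparison for both ends of the planning window makes the cost of choosing the longer horizon explicit before any purchase is made.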

Scalability and Performance

Performance tuning in a grid environment is a coordination problem: every component must keep pace with the others. As the user base expands and computational demand grows, monitoring tools must provide both fine-grained (per-node, per-job) and aggregate views of system health. The real test of a grid architecture comes during peak usage, when the system must stay responsive while operating near its capacity limits. Engineers often implement tiered scaling, in which different components scale independently according to their own load patterns. This avoids the common pitfall of over-provisioning less critical resources while under-provisioning the components that are actually the bottleneck.
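Tiered scaling can be sketched as one scaling decision per tier, each driven only by that tier's own utilization. This is a minimal sketch, assuming a proportional rule similar in spirit to the Kubernetes Horizontal Pod Autoscaler (desired = ceil(current × observed / target)); the `Tier` fields, tier names, and targets are hypothetical.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class Tier:
    name: str            # hypothetical tier label, e.g. "scheduler", "storage"
    replicas: int        # instances currently running
    target_util: float   # desired average utilization, 0..1
    min_replicas: int
    max_replicas: int


def desired_replicas(tier: Tier, observed_util: float) -> int:
    """Move the tier toward its own utilization target, clamped to its bounds."""
    raw = math.ceil(tier.replicas * observed_util / tier.target_util)
    return max(tier.min_replicas, min(tier.max_replicas, raw))


def plan(tiers: list[Tier], utilization: dict[str, float]) -> dict[str, int]:
    """Each tier is scaled independently from its own load signal."""
    return {t.name: desired_replicas(t, utilization[t.name]) for t in tiers}


tiers = [
    Tier("compute", replicas=4, target_util=0.5, min_replicas=2, max_replicas=20),
    Tier("metadata", replicas=4, target_util=0.5, min_replicas=2, max_replicas=8),
]
print(plan(tiers, {"compute": 0.8, "metadata": 0.1}))
```

Because each tier has its own target and bounds, a hot compute tier can grow while an idle metadata tier shrinks, instead of both being scaled by one global rule.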

Security and Access Control

Given today's threat landscape, grid security cannot be an afterthought; it must be built into the design from the start. Encryption protocols form the first line of defense, but security extends well beyond cryptography. More sophisticated grids add behavioral authentication, which learns each user's normal usage patterns and flags anomalies in real time. Access control needs particular attention in collaborative research environments, where the principle of least privilege must be balanced against researchers' need to share data and resources. Many grid operators also commission regular penetration tests from independent security firms to find vulnerabilities before attackers can exploit them.
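The core idea behind behavioral anomaly flagging can be shown with a per-user statistical baseline. This is a minimal sketch, not how any particular product works: it assumes a single numeric usage signal per user (for example, jobs submitted per hour), a sliding window of recent values, and a z-score threshold of 3; `UsageBaseline` and its parameters are hypothetical.

```python
import statistics
from collections import defaultdict, deque


class UsageBaseline:
    """Per-user sliding-window baseline that flags values far from the norm."""

    def __init__(self, window: int = 50, threshold: float = 3.0, min_samples: int = 5):
        self.threshold = threshold        # z-score above which a value is flagged
        self.min_samples = min_samples    # no verdict until a baseline exists
        self.history = defaultdict(lambda: deque(maxlen=window))

    def observe(self, user: str, value: float) -> bool:
        """Record `value` for `user`; return True if it deviates from the user's baseline."""
        past = list(self.history[user])
        anomalous = False
        if len(past) >= self.min_samples:
            mean = statistics.mean(past)
            stdev = statistics.pstdev(past)
            if stdev == 0:
                anomalous = value != mean  # any change from a perfectly flat baseline
            else:
                anomalous = abs(value - mean) / stdev > self.threshold
        self.history[user].append(value)
        return anomalous


baseline = UsageBaseline()
for jobs_per_hour in [10, 12, 11, 9, 10, 11, 12, 10]:
    baseline.observe("alice", jobs_per_hour)
print(baseline.observe("alice", 60))  # a sudden burst relative to alice's history
```

This sketch appends every observation, anomalous or not, so a genuine long-term change in behavior is eventually absorbed into the baseline; a stricter design might quarantine flagged values instead.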

Data Management and Storage

The growing volume of data on modern grids calls for storage designs that go beyond conventional IT systems. Distributed file systems have evolved beyond simple redundancy to place data intelligently according to usage patterns. Some organizations now use predictive caching, which anticipates which datasets will be needed at which sites and stages them in advance to reduce access latency. Robust systems typically take a hybrid approach: high-performance storage for active datasets and lower-cost archival storage for infrequently accessed data. This layered strategy balances performance against cost across the data lifecycle.
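A layered placement policy can be expressed as a small rule over access recency and frequency. The sketch below is illustrative only: the `Dataset` fields, the thresholds (7 days, 10 accesses, 180 days), and the intermediate "warm" tier between high-performance and archival storage are all assumptions chosen to make the idea concrete.

```python
from dataclasses import dataclass


@dataclass
class Dataset:
    name: str
    days_since_access: int
    accesses_last_30d: int


def choose_tier(ds: Dataset, hot_days: int = 7, hot_accesses: int = 10,
                archive_days: int = 180) -> str:
    """Place a dataset on a storage tier from access recency and frequency.
    Thresholds are hypothetical and would be tuned per site."""
    # Recently or frequently used data stays on high-performance storage.
    if ds.days_since_access <= hot_days or ds.accesses_last_30d >= hot_accesses:
        return "hot"
    # Long-untouched data moves to low-cost archival storage.
    if ds.days_since_access >= archive_days:
        return "archive"
    # Everything in between sits on an assumed intermediate tier.
    return "warm"


for ds in [Dataset("run-42", 1, 3), Dataset("survey-2019", 400, 0)]:
    print(ds.name, "->", choose_tier(ds))
```

In practice the same rule would run periodically, migrating datasets as their access pattern changes rather than fixing placement at ingest time.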

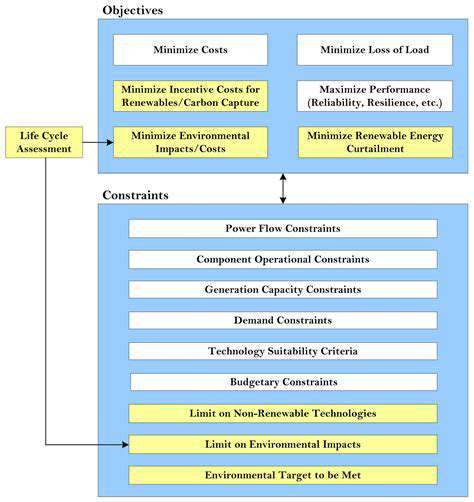

Resource Allocation and Scheduling

Modern grid schedulers have evolved into decision-making engines that weigh many dynamic variables. Rather than simply assigning jobs to whatever resources are free, newer systems also evaluate energy costs, cooling requirements, and even carbon footprint when making allocation decisions. A scheduler that repurposes idle resources for background tasks such as data replication or system maintenance can significantly improve overall utilization. Some research grids have begun experimenting with machine-learning schedulers that refine their policies from historical performance data, so that allocation decisions improve as more data accumulates.
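Energy- and carbon-aware placement can be framed as choosing the feasible node with the lowest weighted cost. This is a minimal sketch under assumptions: cooling overhead is modeled with PUE (power usage effectiveness) as a multiplier on the job's IT energy, `carbon_weight` converts grams of CO2 into the same units as price, and the `Node` fields and values are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Node:
    name: str
    free_cores: int
    price_per_kwh: float      # electricity price at this site
    pue: float                # facility overhead multiplier (cooling etc.)
    carbon_g_per_kwh: float   # grid carbon intensity at this site


def placement_cost(node: Node, job_kwh: float, carbon_weight: float) -> float:
    """Weighted cost of running the job on `node`: energy price plus carbon penalty."""
    facility_kwh = job_kwh * node.pue  # IT energy scaled up by cooling overhead
    return (facility_kwh * node.price_per_kwh
            + carbon_weight * facility_kwh * node.carbon_g_per_kwh)


def pick_node(nodes: List[Node], cores: int, job_kwh: float,
              carbon_weight: float = 0.0) -> Optional[Node]:
    """Return the cheapest node with enough free cores, or None if none fits."""
    candidates = [n for n in nodes if n.free_cores >= cores]
    if not candidates:
        return None
    return min(candidates, key=lambda n: placement_cost(n, job_kwh, carbon_weight))


nodes = [
    Node("A", 64, price_per_kwh=0.15, pue=1.5, carbon_g_per_kwh=400),
    Node("B", 64, price_per_kwh=0.25, pue=1.1, carbon_g_per_kwh=100),
]
print(pick_node(nodes, 32, 10).name)                       # price only
print(pick_node(nodes, 32, 10, carbon_weight=0.001).name)  # carbon also counts
```

Raising `carbon_weight` shifts jobs toward cleaner, better-cooled sites even when their electricity is more expensive, which is the kind of trade-off the scheduler has to make explicit.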

Cost Optimization and Sustainability

The economics of grid operation have shifted in recent years, with energy costs in some deployments approaching hardware costs. Cooling innovations, from liquid immersion to AI-driven airflow optimization, help operators reduce both their carbon footprint and their operating costs. Sustainable grids treat energy efficiency as an ongoing optimization process rather than a one-time configuration. Power monitoring at the rack, node, and even component level provides the granular data needed to identify inefficiencies. Many operators find that simple operational changes, such as shifting non-urgent jobs to off-peak electricity rate periods, yield substantial savings with little impact on research productivity.
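Shifting a non-urgent job to off-peak hours amounts to picking the cheapest contiguous window that still meets the job's deadline. This is a minimal sketch, assuming hourly electricity prices are known in advance and the job draws constant power while running; `cheapest_start` and the example tariff are hypothetical.

```python
from typing import List


def cheapest_start(prices: List[float], duration: int, deadline: int) -> int:
    """Return the start hour (index into `prices`) minimizing total price for a
    job that runs `duration` consecutive hours and must finish by `deadline`.
    Assumes constant power draw, so cost is proportional to summed hourly prices."""
    if duration <= 0 or deadline > len(prices) or duration > deadline:
        raise ValueError("job cannot fit before the deadline")
    best_start, best_cost = 0, float("inf")
    # Slide a window of `duration` hours over every feasible start time.
    for start in range(deadline - duration + 1):
        cost = sum(prices[start:start + duration])
        if cost < best_cost:  # strict '<' keeps the earliest of equally cheap windows
            best_start, best_cost = start, cost
    return best_start


# Hypothetical tariff: peak rate all day except a cheap window from 06:00 to 10:00.
tariff = [0.30] * 6 + [0.10] * 4 + [0.30] * 14
print(cheapest_start(tariff, duration=3, deadline=24))
```

A production scheduler would also account for queue contention and variable power draw, but even this simple window search captures why deferring flexible work can cut energy bills.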