Overcoming Technical Challenges in the Decentralization of Energy Generation

Bridging the Gaps: Understanding Interoperability Challenges

Interoperability, the ability of different systems and technologies to exchange data and use it without manual translation, is crucial for modern businesses and organizations. Achieving it in practice, however, is often difficult. The obstacles come from several sources, including differing data formats, incompatible communication protocols, and inconsistent security standards. Navigating these complexities successfully is essential for building integrated, efficient workflows.

One significant hurdle is the diversity of technologies in use across systems. Each system may rely on proprietary formats or protocols, which makes translating data between them difficult. The resulting lack of standardization fragments the landscape, obstructs the flow of information, and demands considerable effort to bridge. Removing these barriers requires careful attention to each system's specific requirements and a commitment to developing common standards.
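A common way to bridge proprietary formats is the adapter approach: each source gets a small translator into one shared representation, so downstream code only ever sees the common format. The sketch below is hypothetical; the `Reading` type, the two vendor formats, and the field names are invented for illustration and do not describe any real device protocol.

```python
from dataclasses import dataclass

# Shared representation that every source is translated into (hypothetical schema).
@dataclass(frozen=True)
class Reading:
    device_id: str
    power_kw: float
    timestamp: int  # Unix seconds

def from_vendor_a(msg: dict) -> Reading:
    # Hypothetical vendor A: JSON-like dict, power in watts under "pwr_w".
    return Reading(device_id=msg["id"], power_kw=msg["pwr_w"] / 1000.0, timestamp=int(msg["ts"]))

def from_vendor_b(line: str) -> Reading:
    # Hypothetical vendor B: semicolon-separated text "device;timestamp;kW".
    device, ts, kw = line.strip().split(";")
    return Reading(device_id=device, power_kw=float(kw), timestamp=int(ts))

# Registry of adapters; supporting a new system means adding one entry here.
ADAPTERS = {"vendor_a": from_vendor_a, "vendor_b": from_vendor_b}

def normalize(source: str, payload) -> Reading:
    try:
        adapter = ADAPTERS[source]
    except KeyError:
        raise ValueError(f"no adapter registered for {source!r}") from None
    return adapter(payload)
```

With this structure, `normalize("vendor_a", {"id": "inv-1", "pwr_w": 2500, "ts": 1700000000})` and `normalize("vendor_b", "inv-1;1700000000;2.5")` yield equal `Reading` objects, so consumers never need to know which vendor produced the data.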

Security concerns further complicate interoperability. Sensitive data must be exchanged securely between disparate systems, so robust security protocols are essential: encryption protects confidentiality, while message authentication (for example, HMACs or digital signatures) protects integrity and shows that a message came from the expected sender. Meeting these needs often requires a comprehensive review of existing security measures and agreement on common security protocols across systems.
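As a minimal sketch of message integrity between two systems, the functions below use Python's standard `hmac` module and assume both sides already share a secret key (how that key is distributed is out of scope). The `sign`/`verify` names and the envelope layout are hypothetical. Note that an HMAC authenticates a message but does not hide it; confidentiality would still come from TLS or an authenticated cipher.

```python
import hashlib
import hmac
import json

def _canonical(message: dict) -> bytes:
    # Canonical JSON (sorted keys, no extra spaces) so both sides hash identical bytes.
    return json.dumps(message, sort_keys=True, separators=(",", ":")).encode()

def sign(message: dict, key: bytes) -> dict:
    tag = hmac.new(key, _canonical(message), hashlib.sha256).hexdigest()
    return {"body": message, "tag": tag}

def verify(envelope: dict, key: bytes) -> bool:
    expected = hmac.new(key, _canonical(envelope["body"]), hashlib.sha256).hexdigest()
    # Constant-time comparison avoids leaking how many characters matched.
    return hmac.compare_digest(expected, envelope["tag"])
```

A receiver that gets an envelope whose body was altered in transit, or that was signed with a different key, will see `verify` return `False`.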

Overcoming the Obstacles: Strategies for Effective Interoperability

Despite these challenges, several strategies can help organizations achieve interoperability. The first is to define clear communication protocols and data standards: common formats for data storage and transfer that every system adheres to, together with documented guidelines and processes for exchanging data.
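A shared data standard is only useful if systems can check conformance automatically. The sketch below validates a record against a hypothetical schema expressed as a field-to-type mapping; `READING_SCHEMA` and `validate` are illustrative names, and a real deployment would more likely use an established schema language than a hand-rolled checker.

```python
# Hypothetical shared schema: field name -> required Python type.
READING_SCHEMA = {"device_id": str, "power_kw": float, "timestamp": int}

def validate(record: dict, schema: dict = READING_SCHEMA) -> list:
    """Return a list of problems; an empty list means the record conforms."""
    errors = []
    for field, expected in schema.items():
        if field not in record:
            errors.append(f"missing field: {field}")
            continue
        value = record[field]
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, expected):
            errors.append(f"{field}: expected {expected.__name__}, got {type(value).__name__}")
    # Fields the standard does not define are reported too (sorted for stable output).
    for field in sorted(record.keys() - schema.keys()):
        errors.append(f"unexpected field: {field}")
    return errors
```

Returning every problem at once, rather than failing on the first, makes it easier for the team maintaining a non-conforming system to fix all issues in one pass.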

A crucial step is fostering collaboration and communication among stakeholders. Open dialogue between different departments, teams, and organizations is essential to identify common goals and find solutions to interoperability problems. By working together, organizations can leverage shared expertise and knowledge to create more effective and integrated systems. This collaborative approach can also help to identify and resolve potential conflicts early on.

Robust testing is another key element of interoperability. Testing whether systems can actually exchange data exposes compatibility issues early in development, so problems can be resolved before they affect the integrated system. This proactive approach minimizes disruptions and improves overall reliability.
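A simple but effective interoperability test is a round trip: encode data with one system's codec, decode it with the other's, and check that nothing changed. The codecs below are hypothetical stand-ins (system A sends whole watts, system B expects kilowatts), chosen to show how such a test surfaces a subtle mismatch, here silent loss of sub-watt precision.

```python
import json

def encode_system_a(reading: dict) -> bytes:
    # Hypothetical system A: sends power as an integer number of watts.
    return json.dumps({"id": reading["device_id"], "pwr_w": round(reading["power_kw"] * 1000)}).encode()

def decode_system_b(payload: bytes) -> dict:
    # Hypothetical system B: parses A's JSON and converts back to kilowatts.
    msg = json.loads(payload)
    return {"device_id": msg["id"], "power_kw": msg["pwr_w"] / 1000}

def round_trip_failures(samples):
    """Return the samples that do not survive the A -> B round trip unchanged."""
    return [s for s in samples if decode_system_b(encode_system_a(s)) != s]
```

Running `round_trip_failures` over `{"device_id": "inv-2", "power_kw": 0.0004}` reports that reading, because rounding to whole watts turns it into `0.0`; a 2.5 kW reading passes.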

Investing in robust training programs for personnel involved in the system integration process is also important. Understanding the nuances of interoperability and the specific tools and techniques involved is critical for successful implementation. Providing comprehensive training enables teams to effectively address the challenges and optimize the integration process.

Scalability and Reliability Challenges in Distributed Networks

Design Considerations for Robustness

Distributed networks are, by nature, complex systems of many interconnected nodes and data streams. Keeping them scalable and reliable requires deliberate design decisions, starting with the network architecture. A robust architecture handles fluctuating loads and maintains consistent performance under stress, which depends on choosing protocols, data structures, and algorithms that make transmission and processing efficient across the network. Well-designed fault tolerance mechanisms are equally essential: they contain failures so that a single node failure or network outage does not cascade into a service-wide outage. Such mechanisms should anticipate likely failure modes rather than merely react to them.
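One widely used pattern for stopping failures from cascading is the circuit breaker: after repeated failures, callers stop sending requests to a struggling dependency for a cool-down period instead of piling more load onto it. The class below is a hypothetical minimal sketch; the thresholds and the injectable `clock` (added so the behavior can be tested deterministically) are illustrative choices.

```python
import time

class CircuitBreaker:
    """Reject calls to a failing dependency for `reset_after` seconds after `max_failures` errors."""

    def __init__(self, max_failures=3, reset_after=30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed (calls flow normally)

    def call(self, fn, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise RuntimeError("circuit open: call rejected")
            # Half-open: let one trial call through; a single failure re-opens the circuit.
            self.opened_at = None
            self.failures = self.max_failures - 1
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.max_failures:
                self.opened_at = self.clock()
            raise
        self.failures = 0  # a success resets the failure count
        return result
```

Failing fast while the circuit is open frees the caller's resources and gives the dependency time to recover.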

Efficient data replication and consistency protocols are also vital. Replication strategies must provide redundancy and availability without unduly compromising performance. Replicas must also be kept consistent, so that clients read up-to-date data or, where a system deliberately accepts weaker consistency, so that divergent replicas are detected and reconciled. This typically involves synchronization protocols that can handle concurrent updates and conflicting changes. Addressing these questions during the design phase is key to building a scalable, reliable distributed network.
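Vector clocks are one standard technique for telling whether two replica updates are causally ordered or genuinely concurrent (and therefore conflicting). The helpers below are a hypothetical sketch with clocks stored as plain dicts mapping replica id to counter; how a detected conflict is resolved (last-writer-wins, merging values, asking a user) is an application decision not shown here.

```python
def tick(clock: dict, node: str) -> dict:
    """Return a copy of `clock` with `node`'s counter incremented (a local update)."""
    updated = dict(clock)
    updated[node] = updated.get(node, 0) + 1
    return updated

def compare(a: dict, b: dict) -> str:
    """Return "equal", "before", "after", or "concurrent" for vector clocks a and b."""
    nodes = a.keys() | b.keys()
    a_le_b = all(a.get(n, 0) <= b.get(n, 0) for n in nodes)
    b_le_a = all(b.get(n, 0) <= a.get(n, 0) for n in nodes)
    if a_le_b and b_le_a:
        return "equal"
    if a_le_b:
        return "before"
    if b_le_a:
        return "after"
    return "concurrent"  # neither dominates: the updates conflict

def merge(a: dict, b: dict) -> dict:
    """Element-wise maximum, used once a conflict has been reconciled."""
    return {n: max(a.get(n, 0), b.get(n, 0)) for n in a.keys() | b.keys()}
```

For example, if replicas `r1` and `r2` both update a value that started at `{"r1": 1}`, their clocks become `{"r1": 2}` and `{"r1": 1, "r2": 1}`, which `compare` reports as concurrent. In practice the replica performing the reconciliation usually also calls `tick` on the merged clock.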

Addressing Performance Bottlenecks and Failures

Maintaining high performance and minimizing downtime in a distributed network is an ongoing challenge. Performance bottlenecks can arise from network congestion, slow processing on individual nodes, or inefficient data transfer. Finding and resolving them requires a solid understanding of the network's traffic patterns, resource utilization, and the algorithms that drive data processing. Monitoring and diagnostic tools that report performance in real time are crucial for catching issues before they affect users.
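To make "real-time insight" concrete, the hypothetical tracker below keeps a sliding window of latency samples per node and reports the 95th percentile (p95), a common choice because tail latency tends to expose a struggling node that an average would hide. The class name, window size, and nearest-rank percentile method are illustrative assumptions.

```python
import math
from collections import defaultdict, deque

class LatencyTracker:
    """Keep a sliding window of latency samples per node and report p95."""

    def __init__(self, window: int = 100):
        # Each node gets its own bounded deque; the oldest samples fall off automatically.
        self.samples = defaultdict(lambda: deque(maxlen=window))

    def record(self, node: str, latency_ms: float) -> None:
        self.samples[node].append(latency_ms)

    def p95(self, node: str) -> float:
        data = sorted(self.samples.get(node, ()))
        if not data:
            raise ValueError(f"no samples for {node!r}")
        # Nearest-rank percentile: smallest sample with at least 95% of samples at or below it.
        rank = math.ceil(0.95 * len(data))
        return data[rank - 1]

    def slowest(self) -> str:
        """Node with the highest p95 latency: the first place to look for a bottleneck."""
        return max(self.samples, key=self.p95)
```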

Failures must also be managed proactively. Distributed systems are susceptible to many kinds of failure, from individual node crashes to network partitions, and fault tolerance mechanisms must let the network keep operating when parts of it fail. Common strategies include automatic failover, redundant components, and self-healing capabilities. Regular backups and disaster recovery plans are also critical for limiting the impact of unexpected outages and ensuring business continuity.
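Automatic failover is often driven by heartbeats: each node reports in periodically, and traffic moves to the next node in priority order when the current one goes quiet. The selector below is a hypothetical minimal sketch; the names, the 5-second timeout, and the injectable clock are illustrative. A timeout cannot distinguish a crashed node from a slow or partitioned one, so production systems typically add fencing or consensus-based leader election to prevent two nodes from acting as primary at once.

```python
import time

class FailoverSelector:
    """Route to the highest-priority node whose last heartbeat is recent enough."""

    def __init__(self, nodes, timeout=5.0, clock=time.monotonic):
        self.nodes = list(nodes)  # ordered by priority: primary first, then standbys
        self.timeout = timeout
        self.clock = clock
        self.last_seen = {}

    def heartbeat(self, node):
        self.last_seen[node] = self.clock()

    def is_alive(self, node):
        seen = self.last_seen.get(node)
        return seen is not None and self.clock() - seen <= self.timeout

    def active(self):
        for node in self.nodes:
            if self.is_alive(node):
                return node
        raise RuntimeError("no healthy node available")
```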

Load balancing is another important tool against performance bottlenecks. Distributing work across the network's resources keeps any single node from becoming overloaded and preserves overall performance. This matters most in high-traffic scenarios where demand fluctuates significantly.
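One simple load-balancing strategy is "least connections": send each new request to the node with the fewest requests currently in flight. The class below is a hypothetical sketch of that policy only; a real balancer would also handle health checks and node weights.

```python
class LeastConnectionsBalancer:
    """Send each new request to the node with the fewest in-flight requests."""

    def __init__(self, nodes):
        self.active = {node: 0 for node in nodes}

    def acquire(self) -> str:
        # min() returns the first minimal key, so ties go to the earliest-listed node.
        node = min(self.active, key=self.active.get)
        self.active[node] += 1
        return node

    def release(self, node: str) -> None:
        # Call when a request finishes so the node's load count stays accurate.
        self.active[node] -= 1
```

Compared with round-robin, this adapts automatically when some requests take much longer than others, because slow nodes accumulate in-flight work and stop receiving new requests.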

Proactive monitoring and alerting systems are also crucial. They give early warning of performance problems or failures, so administrators can take corrective action, such as adjusting resource allocation or load balancing, before significant disruptions occur.
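Alert rules need some care to be useful: firing on a single spike produces noise, and an alert that flips on and off around one threshold ("flapping") is hard to act on. The hypothetical rule below fires only after `k` consecutive breaches and clears only once the metric falls below a lower threshold (hysteresis). The thresholds are illustrative, expressed here as percent utilization.

```python
class ThresholdAlert:
    """Fire after `k` consecutive values above `fire_above`; clear once a value drops below `clear_below`."""

    def __init__(self, fire_above: float, clear_below: float, k: int = 3):
        if clear_below > fire_above:
            raise ValueError("clear_below must not exceed fire_above")
        self.fire_above = fire_above
        self.clear_below = clear_below
        self.k = k
        self.breaches = 0
        self.firing = False

    def observe(self, value: float) -> bool:
        """Feed one measurement; return whether the alert is currently firing."""
        if self.firing:
            if value < self.clear_below:
                self.firing = False
                self.breaches = 0
        else:
            # Count consecutive breaches; any value at or below the threshold resets the count.
            self.breaches = self.breaches + 1 if value > self.fire_above else 0
            if self.breaches >= self.k:
                self.firing = True
        return self.firing
```

With `fire_above=90`, `clear_below=70`, and `k=3`, the sequence 95, 95, 80 never fires (the 80 resets the count), while three consecutive readings of 95 do, and the alert then stays active through readings of 85 and 75 until a reading of 65 clears it.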

Finally, security must be designed in from the start. Distributed networks are frequent targets of cyberattacks, and robust measures are needed to protect data and preserve the integrity of the system. Encryption, access controls, and intrusion detection systems all help mitigate the risk of security breaches.
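Access controls are easiest to reason about when they deny by default: a request is allowed only if some role explicitly grants it. The role-based sketch below uses a hypothetical policy (the role names and resources are invented for illustration); real systems usually load such policies from configuration and log each decision.

```python
# Hypothetical policy: role -> set of (action, resource) pairs that role may perform.
POLICY = {
    "operator": {("read", "telemetry"), ("write", "setpoints")},
    "analyst": {("read", "telemetry")},
}

def is_allowed(roles, action, resource, policy=POLICY) -> bool:
    """Deny by default: allow only if at least one of the caller's roles grants the permission."""
    return any((action, resource) in policy.get(role, set()) for role in roles)
```

Unknown roles and callers with no roles fall through to `False`, so a misconfigured account loses access rather than gaining it.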

Data Management and Security Concerns in Decentralized Systems

Data Integrity and Verification

Ensuring data integrity and reliable verification across decentralized systems is a significant challenge. Decentralized databases gain security benefits from distributed storage, but they require robust mechanisms to prevent data manipulation and to ensure that all nodes agree on the same version of the data. Cryptographic hashing and consensus mechanisms are crucial here: hashing lets participants verify that data has not been altered, and consensus lets them agree on which version is authoritative. Together they keep the system's data valid and transparent. Designing these mechanisms requires careful consideration of the data types involved and of how malicious actors might introduce fraudulent or corrupted data.
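A Merkle tree is a common way to use cryptographic hashing for this purpose: two nodes can check whether they hold the same set of records by comparing a single 32-byte root hash. The function below is a minimal sketch; the `0x00`/`0x01` prefixes follow the leaf/interior domain-separation idea used in RFC 6962 (Certificate Transparency), but no byte-for-byte compatibility with any specific system is claimed. Hashing only detects divergence; deciding which version is authoritative is the job of the consensus mechanism.

```python
import hashlib

def _h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()

def merkle_root(records: list) -> bytes:
    """Hash each record, then combine hashes pairwise, level by level, up to one root."""
    if not records:
        return _h(b"")
    # Different prefixes for leaves and interior nodes keep one from being passed off as the other.
    level = [_h(b"\x00" + r) for r in records]
    while len(level) > 1:
        nxt = [_h(b"\x01" + level[i] + level[i + 1]) for i in range(0, len(level) - 1, 2)]
        if len(level) % 2 == 1:
            nxt.append(level[-1])  # carry an unpaired node up unchanged
        level = nxt
    return level[0]
```

Changing any record, or appending a duplicate, changes the root, so a mismatch between two nodes' roots signals that their copies have diverged.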

Privacy Preservation in Decentralized Environments

Maintaining user privacy in decentralized systems is paramount. Data anonymization techniques, differential privacy, and zero-knowledge proofs are essential tools to protect sensitive information while still enabling the desired functionalities. These approaches must be carefully implemented to prevent unintended disclosures or re-identification of individuals, ensuring that the benefits of decentralization do not come at the expense of personal privacy. This requires a thorough understanding of the data being collected and processed, as well as the potential risks associated with different privacy-preserving methods.
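Differential privacy can be illustrated with the Laplace mechanism: to release a count, add noise drawn from a Laplace distribution with scale `1/epsilon`, which gives epsilon-differential privacy when each person can change the count by at most one. The sketch below assumes each individual contributes a single record; the function names are hypothetical. It uses Python's `random` module for readability, which is not cryptographically secure and, like any naive floating-point sampler, is not suitable for production; vetted differential-privacy libraries should be used there.

```python
import random

def laplace_noise(scale: float, rng: random.Random) -> float:
    # The difference of two independent exponentials with mean `scale` is Laplace(0, scale).
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_count(values, predicate, epsilon: float, rng=None) -> float:
    """Epsilon-DP count, assuming each person contributes one value (sensitivity 1)."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.Random()
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)
```

Smaller `epsilon` means more noise and stronger privacy; any single released value is noisy, but averages over many independent queries concentrate around the true count, which is why repeated queries must be counted against a privacy budget.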

Scalability and Performance Considerations

Decentralized systems often face scalability challenges as the number of participants and transactions increases. Efficient data structures and consensus mechanisms are crucial for maintaining performance and responsiveness. Techniques such as optimistic concurrency control and sharding can help mitigate these challenges, keeping the system functional and accessible as demand grows. Designing a scalable, performant decentralized architecture starts with a careful estimate of the load the system is expected to carry.
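Sharding needs a rule for assigning each key to a shard. Consistent hashing is one common choice because adding a shard moves only the keys that now belong to the new shard, instead of reshuffling everything. The ring below is a hypothetical minimal sketch; the 100 virtual nodes per shard (which smooth out the key distribution) and the SHA-256-based positions are illustrative choices.

```python
import bisect
import hashlib

def _point(key: str) -> int:
    # Stable 64-bit ring position (Python's built-in hash() is randomized per process).
    return int.from_bytes(hashlib.sha256(key.encode()).digest()[:8], "big")

class HashRing:
    """Consistent hashing: each key maps to the first shard point clockwise from it."""

    def __init__(self, shards, vnodes=100):
        self.ring = sorted((_point(f"{s}#{i}"), s) for s in shards for i in range(vnodes))
        self.points = [p for p, _ in self.ring]

    def shard_for(self, key: str) -> str:
        # Wrap around to the start of the ring past the last point.
        i = bisect.bisect(self.points, _point(key)) % len(self.ring)
        return self.ring[i][1]
```

When a fourth shard joins a three-shard ring, roughly a quarter of the keys move, and every moved key moves to the new shard.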

Security Vulnerabilities and Mitigation Strategies

Decentralized systems are not immune to security vulnerabilities. Sybil attacks, double-spending, and denial-of-service attacks all pose significant threats. General measures such as strong encryption, access controls, and intrusion detection systems help safeguard the system, but some of these threats are countered mainly by protocol design: Sybil resistance usually comes from making identities costly to obtain (for example, through proof-of-work, proof-of-stake, or permissioned membership), and double-spending is prevented by a consensus mechanism that agrees on a single order of transactions. Thorough security audits and regular vulnerability assessments are essential for finding weaknesses in the system design before they can be exploited, preserving the integrity of the data stored and exchanged.
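Per-client rate limiting is one standard building block against denial-of-service: a token bucket lets a client send short bursts but caps its sustained request rate. The class below is a hypothetical sketch with an injectable clock for testing. It limits damage from any single client, but a limit keyed by identity offers little protection against an attacker who can cheaply create many identities, so it complements rather than replaces Sybil resistance.

```python
import time

class TokenBucket:
    """Allow bursts up to `capacity` requests, refilling at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity  # start full so an idle client can burst immediately
        self.clock = clock
        self.updated = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill for the time elapsed since the last call, never exceeding capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False
```

A node would typically keep one bucket per peer or client and drop (or deprioritize) requests for which `allow()` returns `False`.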

Governance and Auditing Mechanisms

Establishing transparent and accountable governance mechanisms is vital for decentralized systems. Clearly defined rules and procedures for data access, modification, and dispute resolution are essential. Auditing mechanisms to track changes and ensure compliance with established policies are necessary for maintaining trust and accountability. These mechanisms need to be designed to be robust and adaptable to the evolving needs of the system, while also being easily understood and accessible to all participants. This fosters a sense of trust and encourages active participation in the governance process.
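An auditing mechanism can be made tamper-evident by chaining entries with hashes: each entry stores the hash of the one before it, so editing any past entry breaks every later link. The sketch below is hypothetical (entry fields and function names are illustrative). Chaining alone does not stop someone who can rewrite the entire log and recompute every hash; in a decentralized setting, participants would also share or anchor the latest hash so a rewritten history can be noticed.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

def _entry_hash(actor: str, action: str, prev: str) -> str:
    body = {"actor": actor, "action": action, "prev": prev}
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()

def append_entry(log: list, actor: str, action: str) -> dict:
    prev = log[-1]["hash"] if log else GENESIS
    entry = {"actor": actor, "action": action, "prev": prev, "hash": _entry_hash(actor, action, prev)}
    log.append(entry)
    return entry

def verify_log(log: list) -> bool:
    """Recompute every hash and link; any edited, removed, or reordered entry fails."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev or entry["hash"] != _entry_hash(entry["actor"], entry["action"], entry["prev"]):
            return False
        prev = entry["hash"]
    return True
```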

Overcoming the Infrastructure Gap: Addressing Uneven Access

Bridging the Digital Divide: A Critical Need

The digital divide, a persistent chasm between those with access to technology and those without, creates significant disparities in education, employment, and overall well-being. This disparity isn't just about having a computer or internet access; it encompasses the skills and knowledge necessary to effectively utilize these tools. Addressing this fundamental issue requires a multifaceted approach that goes beyond simply providing hardware. We must also consider the educational and training components that empower individuals to navigate the digital landscape.

The consequences of this gap are far-reaching, impacting economic opportunities, social mobility, and even political participation. Closing the digital divide isn't merely a technological challenge; it's a societal one, demanding collaborative efforts from governments, educational institutions, and private sector organizations.

Investing in Infrastructure: A Foundation for Progress

Robust infrastructure is the bedrock upon which a functional digital society is built. This includes not only reliable internet access but also the necessary digital literacy training and support systems. High-speed internet, especially in underserved communities, is essential for accessing online educational resources, job opportunities, and essential services.

Moreover, the infrastructure must be resilient, adaptable, and capable of supporting the evolving needs of a digitally driven world. This requires ongoing investment and maintenance, along with a proactive approach to emerging challenges.

Empowering Communities: Fostering Digital Literacy

Simply providing access to technology isn't enough. Individuals need the skills to effectively use these tools and resources. Digital literacy programs must be tailored to the specific needs of each community, addressing the unique challenges and opportunities they face. This means offering accessible and engaging training programs to teach essential digital skills, covering everything from basic computer operation to advanced online research and communication methods.

These programs should be integrated into existing educational systems, ensuring that digital literacy becomes a fundamental part of the curriculum. This approach fosters not just technical proficiency, but also critical thinking and problem-solving skills. It's essential to create a learning environment that encourages continuous learning and adaptation to the ever-changing digital landscape.

Addressing Socioeconomic Factors: A Holistic Approach

The digital divide isn't solely a technological problem; it's deeply intertwined with socioeconomic factors. Poverty, lack of education, and limited access to resources can all contribute to unequal access to technology and digital skills. Tackling this requires a holistic approach that addresses these underlying issues. This can include providing financial assistance for internet access, offering affordable computer devices, and creating supportive community networks.

Furthermore, bridging the digital divide requires actively engaging with communities to understand their specific needs and concerns. This means actively listening to the voices of those most affected and creating solutions that are culturally relevant and accessible. Only by fostering inclusivity and empathy can we truly bridge the gap.

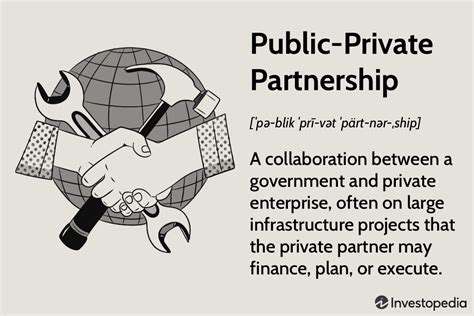

Collaboration and Partnerships: A Shared Responsibility

Closing the infrastructure gap requires a collaborative effort from governments, private organizations, and educational institutions. Partnerships between these entities are essential in sharing resources, expertise, and best practices. Public-private partnerships can leverage the resources and innovation of the private sector to develop and implement effective solutions.

Governments can play a crucial role by establishing policies that promote digital inclusion and provide funding for initiatives that bridge the digital divide. Educational institutions can integrate digital literacy programs into their curricula, fostering a digital-first learning environment. By working together, we can create a more equitable and technologically inclusive society for everyone.